Emotion stereotypes in generative AI


This page accompanies the Wall Street Journal article Think AI Can Perceive Emotion? Think Again (subscription required).

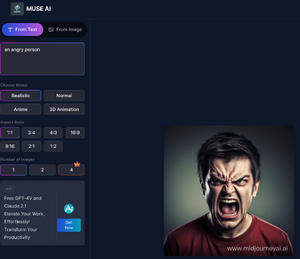

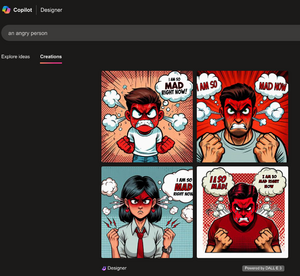

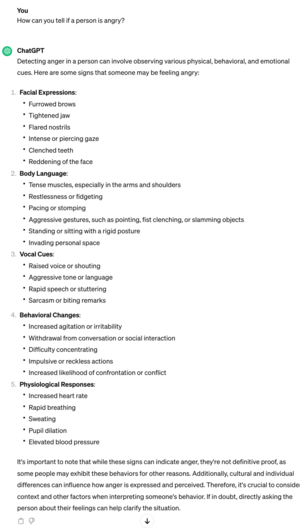

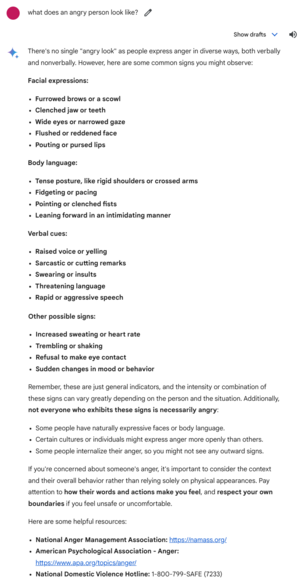

As described in the article, popular AI systems such as ChatGPT, Gemini, Midjourney, and DALL-E respond with stereotypes about emotion that do not reflect the richness of emotional life in the real world. ChatGPT and Gemini add disclaimers that certain "signs," such as scowling, may or may not indicate anger, but their lists of signs still reflect the stereotype. (In real life, for example, angry people in Western cultures scowl only about 35% of the time, and people who scowl are not angry about half the time.)

Results

In the results below, each AI was prompted once about the emotion of anger on February 14, 2024.

| AI model | Prompt | Results |
|---|---|---|
| Midjourney | "an angry person" | |
| DALL-E | "an angry person" | |
| ChatGPT | "How can you tell if a person is angry?" | |
| Gemini | "What does an angry person look like?" | |